What is that? Prebuilt? I don't know which one it is. Can you check this?

-

AH, yes.

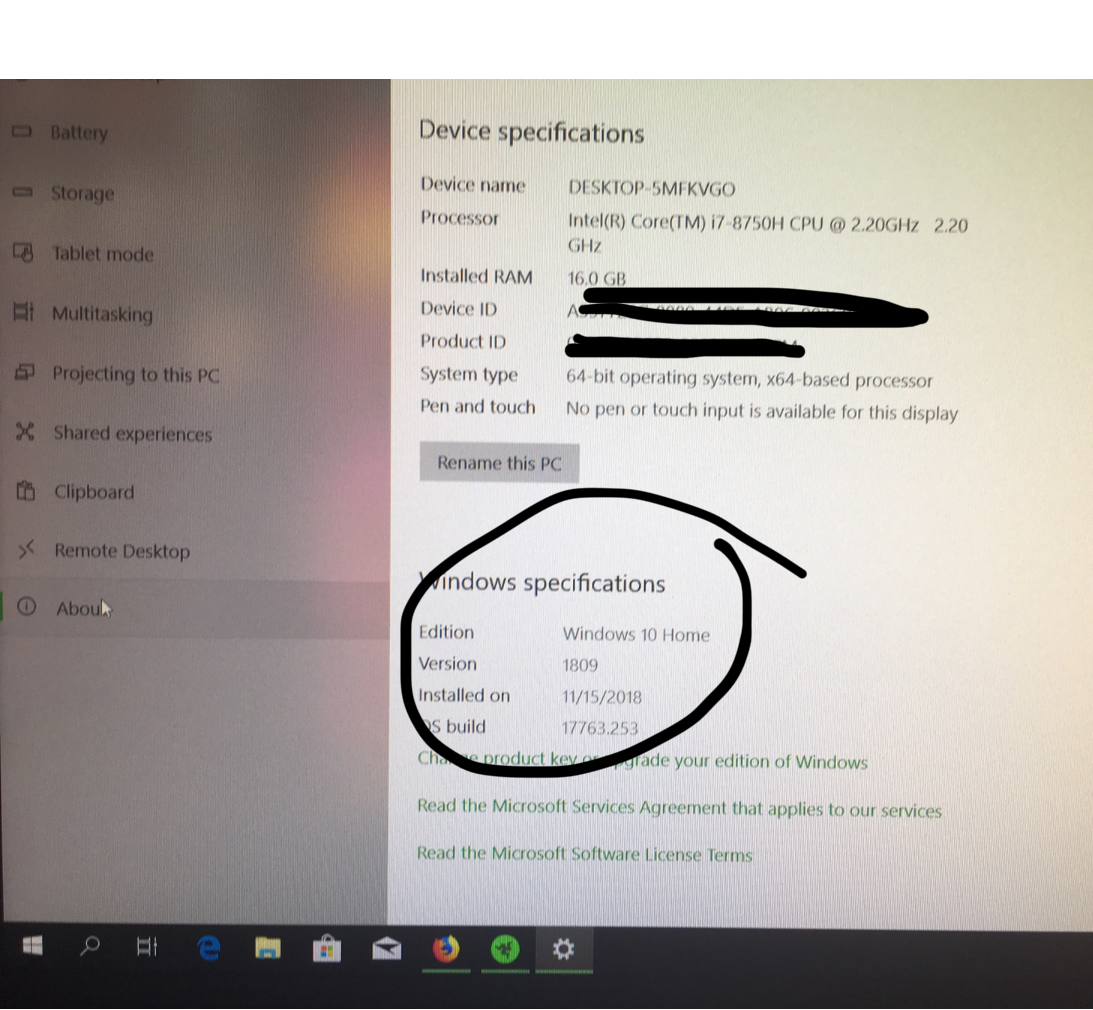

Version 1809

OS Build: 17763.292 -

I see, so I couldn't do it manually from Nvidia. Anyway, thanks for sharing. Let us know if you find any problems.

Enjoy the machine anyway.


I believe tons of tweakers are watching this and waiting for someone to find the issues, like...


We like this.


-

I'm undervolting by 100mV and running Firestrike again. I'll post the results in a min.

-

You can go to -150mV with the 8750H in 3DMark, or lower if you're lucky, but not in real gaming usage. I've been using -130mV for 8 months. Do you want to try to catch this?

https://www.3dmark.com/fs/16524814 -

100mV undervolt had negative results:

![[IMG]](images/storyImages/PzCO44b.png)

Loss of 681 points, no change other than the 100mV undervolt via XTU -

What did the XTU graph show? Did any line cross 90 degrees, especially during the last two physics tests?

Also look at background tasks. When something is running in the background, it also stresses the CPU and lowers the physics score. -

Peak temp only hit 82 degrees Celsius

-

Here's my XTU settings, first time using the program TBH, if you guys have recommendations I'll gladly test for you:

![[IMG]](images/storyImages/tIzISgs.png)

-

Hmm, that's odd. Finish all your updates first, until the Blade is well optimized, then run it again. If I'm not wrong, I also got a great score at first and then a worse one. Several days later, once the updates had finished, I got a better score than the first one.

-

I've got nothing else to update, my man!

-

Yes, but even if you don't update manually, it's a Windows and Optimus laptop, and tons of things run in the background. Programs like Steam, Origin, and Nvidia GeForce Experience may also affect the benchmark score. I'm not a super tweaker, but I can say this from my earlier experience. How is the battery life shown on battery mark? Did you install HWiNFO64 for monitoring?

-

Nah, nothing like that at the moment. I upped the fans to 100%, put it on "balanced mode", still 100mV undervolt, and got this score:

![[IMG]](images/storyImages/w5tf3Om.png)

I feel like it should be higher than 17,258.

Trying again with 50mV undervolt, 100% fans, balanced mode, brb -

Alright, 50mV undervolt, 100% fans, balanced mode gave 16,660

![[IMG]](images/storyImages/lS90o2f.png)

-

Trying gaming mode, fans 100%, 50mV undervolt now....

-

You've already beaten the Triton 2080 Max-Q, and with time you'll surpass my eGPU setup. Note that DX11 Fire Strike isn't a great benchmark for Turing cards: you'll hardly surpass 1080 laptop performance there, but in pure graphics benchmarks like Superposition, Unigine Heaven, or DX12 Time Spy you'll easily surpass that. -

Ah! I wasn't aware of that. Ok I'll switch to Timespy and Heaven now. Here's Firestrike results at 1080p, 50mV undervolt, fans 100%, "gaming" mode enabled:

![[IMG]](images/storyImages/KWOgW2q.png)

17,843 -
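To put the three Fire Strike runs reported so far side by side, here is a minimal Python sketch. The scores and settings are the ones posted above; the `percent_change` helper and the choice of the first run as the reference are only illustrative assumptions, not anything 3DMark provides.

```python
# Fire Strike overall scores reported in the posts above
# (1080p, fans at 100% in all three runs).
runs = [
    ("-100 mV, balanced mode", 17258),
    ("-50 mV, balanced mode", 16660),
    ("-50 mV, gaming mode", 17843),
]


def percent_change(new, old):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


# Use the first run as the reference point (an arbitrary choice).
reference_label, reference_score = runs[0]
for label, score in runs:
    delta = percent_change(score, reference_score)
    print(f"{label:<24} {score:>6}  {delta:+.1f}% vs {reference_label}")
```

Single runs vary from pass to pass, so differences of a few percent like these are better treated as a hint than as a firm result.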

This!!

Stop there, or you'll tempt me into getting one

-

Time Spy

50mV undervolt

Fans 100%

Gaming mode enabled

1080p

7,507

![[IMG]](images/storyImages/MsXJ4aB.png)

-

Ah, this one I can match with my 2070, but it's better than the average desktop 1080, I believe.

-

Heaven benchmark

50mV undervolt

Fans 100%

Gaming mode enabled

1080p

FPS: 105.5

Score: 2657

Min FPS: 9.1

Max FPS: 224.9

![[IMG]](images/storyImages/JfCl8ws.jpg)

Heaven settings

Render: DX11

Mode: 1080p 8xAA fullscreen

Quality: Ultra

Tessellation: Extreme -

@yggdra.omega did you finish all the benchmarks? You can still increase your 3DMark scores, especially in Fire Strike and maybe in other benchmarks, if you want.

-

I was losing steam for sure, hahaha. What are some steps to increase? I didn't know anyone else was still interested, but I'm down for more testing

-

Rise of the Tomb Raider benchmark

1080p

50mV undervolt

Gaming mode via Synapse

Fans 100%

Very High default settings

DX12 enabled

FXAA

Vsync Double Buffered

![[IMG]](images/storyImages/O4uy1JL.jpg)

-

Interestingly enough, here's my desktop with a STRIX 2080 Ti and 9900K, stock with identical settings as the RB15:

![[IMG]](images/storyImages/bOEv2Cy.jpg)

-

Ashes of the Singularity benchmarks:

DX12 GPU Focused - Crazy Settings - 1080p - Vsync off - Gaming mode enabled via Synapse - Fans 100% - 50mV undervolt

![[IMG]](images/storyImages/QMjpyu5.jpg)

DX12 CPU Focused - Crazy Settings - 1080p - Vsync off - Gaming mode enabled via Synapse - Fans 100% - 50mV undervolt

![[IMG]](images/storyImages/MJ4CilK.jpg)

DX11 GPU Focused - Crazy Settings - 1080p - Vsync off - Gaming mode enabled via Synapse - Fans 100% - 50mV undervolt

![[IMG]](images/storyImages/M2O4gHs.jpg)

-

My desktop for comparison

DX12 GPU Focused - Crazy Settings - 1080p - Vsync off - Stock clocks - ROG STRIX RTX 2080Ti - i9 9900K

![[IMG]](images/storyImages/Q3dLSct.jpg)

DX12 CPU Focused - Crazy Settings - 1080p - Vsync off - Stock clocks - ROG STRIX RTX 2080Ti - i9 9900K

![[IMG]](images/storyImages/XcxMPY6.jpg)

-

Yes, we're gaming, not running Fire Strike. On my eGPU setup my Fire Strike score is also way under anyone here who puts Fire Strike scores in their signature, but in real gaming usage, in some titles I get similar or even better FPS than the desktop or notebook dGPU FPS listed on Notebookcheck. My reaction was like yours: "interesting".

You can bump your performance by running OC Scanner in Afterburner, noting the + value on the core that it achieves, and then increasing that a bit at a time until benchmarks like Fire Strike crash. Is -50mV really the maximum undervolt for your CPU? In my opinion it could go lower, because my 8750H is easily stable at -120. I can even use -160 for Fire Strike, but for daily use I stay at -130, since going lower than that may sometimes crash games. -
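The step-until-it-crashes approach described here can be sketched as a small search loop. This is only an illustration under stated assumptions: `is_stable` is a hypothetical callback standing in for "run the benchmark by hand at this offset and see whether it crashes", and the 10 mV step, the -200 mV floor, and the 30 mV safety margin are arbitrary values (the margin mirrors the gap between the -160 benchmark offset and the -130 daily offset mentioned in the post). Nothing here talks to XTU, ThrottleStop, or Afterburner.

```python
def find_daily_offset(is_stable, start_mv=-50, floor_mv=-200,
                      step_mv=10, margin_mv=30):
    """Lower the voltage offset step by step until is_stable() fails,
    then back off by margin_mv for daily use.

    Offsets are in mV; negative means undervolt.
    Returns None if even the starting offset is unstable.
    """
    last_good = None
    offset = start_mv
    while offset >= floor_mv and is_stable(offset):
        last_good = offset
        offset -= step_mv
    if last_good is None:
        return None
    # Move back toward 0 mV, but never end up with a positive offset.
    return min(last_good + margin_mv, 0)


# Hypothetical chip that passes the benchmark down to -160 mV.
def example_chip(offset_mv):
    return offset_mv >= -160


print(find_daily_offset(example_chip))
```

With the made-up `example_chip`, the loop stops after -160 mV and suggests -130 mV. On real hardware each `is_stable` call is a full benchmark or gaming session, so a coarser step may be more practical.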

I've been considering picking up one of these new RTX blades but I have one concern

All my benchmarking in actual games on the 2018 razer blade with 1070 showed me the surprising result that the CPU would thermal throttle to such a degree (in most games locked at baseclock 2.2ghz) that I was largely CPU bottlenecked. And yes, that's even with 130mv undervolt. Assassin's creed origins and odyssey were a good example of this. Easy to get great burst performance in benchmarks but the sustained gaming performance was very compromised by CPU thermals.

Are you able to test some real world games and use a hardware monitor to test your CPU and GPU temps? And to see what CPU frequency the laptop is able to hold in some triple A titles?

I don't want to spring for an upgrade to turing if the CPU throttling is such that I continue to be CPU bottlenecked anyway. -

What are your max temperatures and FPS when you're gaming ?

AC:Odyssey is a GPU intensive game that struggles to reach 50fps on my normal 1070, so I doubt that it's your CPU that is limiting you in that game.

For Origins it was better, but I had dips into the 50ish fps range with my 1070, again GPU limited.

Sadly, many laptops out there are struggling to cool the 8750H. I still don't understand why Max-Q laptops don't just go with the 4C/8T i5 Coffee Lake chips. But repasting + undervolting greatly helps. -

So I've been a long time lurker on the forums. Thought I'd help you resolve this issue. I also have a Blade 15 with a 1070 Max-Q, but my clocks stay at 3.4 GHz consistently.

I use ThrottleStop to undervolt by 135mV and set my multipliers to 38x on 1-2 cores, 36x on 3-4 cores, and 34x on 5-6 cores. I also uncheck BD PROCHOT and manually set Speed Shift to 64. I run games in Synapse gaming mode throughout. Recently the Anthem demo ran fine, with average temps around 80C and max temps around 90C. No thermal throttling or power limit throttling. And we all know the Anthem demo is poorly optimized and pretty CPU heavy. -
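For anyone wondering how those multipliers relate to the 3.4 GHz figure, here is a small Python sketch. It assumes the nominal 100 MHz base clock (BCLK) used on Intel Core platforms; the multipliers per active-core count are taken from the post, while the names `turbo_ratios` and `max_clock_ghz` are made up for the example.

```python
BCLK_MHZ = 100  # nominal base clock, assumed rather than measured

# Multiplier limits from the post, keyed by number of active cores.
turbo_ratios = {1: 38, 2: 38, 3: 36, 4: 36, 5: 34, 6: 34}


def max_clock_ghz(active_cores, ratios=turbo_ratios, bclk_mhz=BCLK_MHZ):
    """Highest clock allowed with the given number of active cores."""
    return ratios[active_cores] * bclk_mhz / 1000.0


for cores in sorted(turbo_ratios):
    print(f"{cores} active core(s): up to {max_clock_ghz(cores):.1f} GHz")
```

With all six cores loaded, as in most games, the 34x limit works out to the 3.4 GHz the poster reports holding.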

Marketing, probably. The i7 brand is associated with high performance (be that true or not). The same reason some notebooks are sold with i9 (super duper ultra maximum performance) or i7 U CPUs.

-

So the CPU maxes out at 100C in CPU intensive games such as AC once it reaches steady state, at least until the CPU throttles down to baseclock and it might come down to the 90s (and again, this is with -130mv undervolt). FPS depends what's on screen, but when it's at the 2.2 ghz baseclock can dip into the 40s-50s, if the CPU clocks up, the FPS gets into the 60s-70s (this is with tweaked settings, mix of high and medium, not everything on ultra). Changing the graphics settings up to ultra has no impact on the frames, and given the huge thermal throttling on the CPU and big steps in framerates when the CPU briefly clocks up, I can only conclude I'm CPU limited.
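One way to put a number on "throttles down to baseclock" is to log temperature and clock speed during a session and count how often the CPU sits at 2.2 GHz. The sketch below assumes you already have such samples from a monitoring tool; the sample values are hypothetical and made up purely for illustration, and the 0.05 GHz tolerance is an arbitrary choice.

```python
BASE_CLOCK_GHZ = 2.2  # 8750H base clock, as cited in the post


def throttled_fraction(samples, base_ghz=BASE_CLOCK_GHZ, tolerance_ghz=0.05):
    """Share of samples where the CPU sat at (or below) base clock.

    samples: list of (temp_c, clock_ghz) tuples.
    """
    if not samples:
        return 0.0
    at_base = sum(1 for _, ghz in samples if ghz <= base_ghz + tolerance_ghz)
    return at_base / len(samples)


# Hypothetical (temp_c, clock_ghz) readings, NOT measured data.
samples = [(85, 3.9), (96, 3.4), (100, 2.2), (99, 2.2), (93, 2.2), (95, 3.1)]

peak_temp = max(temp for temp, _ in samples)
print(f"Peak temp: {peak_temp} C")
print(f"{throttled_fraction(samples):.0%} of samples at base clock")
```

A high fraction at base clock, together with FPS that does not move when graphics settings change, would support the CPU-limited conclusion drawn above.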

Assassin's creed origins is kind of a worst case scenario for the blade though (due to its built in DRM/denuvo/virtualization it overuses the CPU), most other games don't suffer as much. But I get thermal throttling of the CPU to 2.2ghz in everything I play, if I play it long enough for the laptop to reach steady state thermals (AKA once everything has fully warmed up).

I couldn't agree more about using a 4 core i5 CPU for gaming. I experimented once by using windows' ability to disable CPU cores on a chip, and the temps were much better. But, disabling the cores also disables turbo boost, so I was still locked at 2.2ghz baseclock and couldn't get any performance gains. Having the 6 core is nice in principle if you have creator-type applications that only heavily load the CPU or GPU one at a time, as the blade can cool either one, just not both, adequately.

Does repasting really help? I have read that the thermal paste on the blade advanced is pretty decent- the only way to get a big improvement is by using liquid metal which is not recommended due to the aluminum vapor chamber material, which can bond with liquid metal. But, I haven't been bold enough to test it for myself, so that is just what I have heard.

My hope was that the new RTX blades, specifically the ones with the 2070 or 2060 max Q, would be relatively more power efficient and thus generate less heat, allowing more thermal headroom for the CPU. But the TDP on the 2060, 2070, and 2080 max Q on nvidia spec sheets is listed as 80 watts for all of them. Difference seems to be CUDA cores and clock speeds. Since neither the GPU nor CPU has seen a process node shrink in this generation, I suspect the new blades will throttle just as badly.

I think the real machine to get will be a refresh of the blade when intel moves to 10nm, as the same chassis might be able to do the job then. But, knowing PC manufacturers, they'll just shrink the laptops down even more to be macbook air sized and they'll still be inadequately cooled and throttle. -

Which profile do you use for AC Odyssey? If it's gaming mode, I wouldn't recommend it. It's an overclocking mode that raises the GPU core and memory to higher clocks. You can use it for esports titles that aren't CPU-demanding, like OW, but not for triple-A titles like the AC franchise, SoTR, FFXV, etc. I could keep the CPU at around 90 during a play session of AC Odyssey and then SoTR, without disabling turbo boost or limiting the clock multiplier, on factory thermal paste on the old 1070 Max-Q Blade: balanced mode, 4800rpm, 1080p high, 60fps, dropping to the low 50s in town. Spikes to the high 90s happened not during gameplay but only in loading scenes, for a second. That's expected in these titles since, as mentioned above, besides rendering graphics data they also process a lot of DRM layers.

After 9 months, still on factory thermal paste, I ran a test with my tweaks (such as undervolting) reset, to make it comparable to the Notebookcheck review unit out of the box, plus Synapse gaming mode for max CPU TDP. The result was better than the Notebookcheck review unit running a similar benchmark.

I usually do this after a year to decide whether to repaste or not. Since I still have over a year of warranty left, I'm trying not to, and my old Blade 14 had similar temperatures and held up for around 2 years of gaming before I sold it.

So I think the 2019 Blade 15 should be able to do this too, and with good monitoring it'll also last a long time. -

I ordered the Razer with the RTX 2080. Does anyone know what brand of SSD is used? Samsung 970 Pro?

-

I would be tempted to order one if they offered a 4K screen with the 2080 instead of the 2070. Although i suspect they will do the 4K OLED with the 2080. If so, that's a buy for me

-

Me too, me too. For me, that plus a new Ice Lake CPU is a buy. The new 2080 Max-Q seems to deliver a 30 to 50% performance bump over last gen's most powerful 1070 Max-Q, but I want more.

Usually Razer puts a slower NVMe drive in the 256GB model and a faster one in the higher-capacity models. The 2018 Advanced 256GB model had the PM961 and the 512GB one had the PM981, which I think is around 970 Evo speed. I doubt an OEM model has a 970 Pro-speed SSD. -

yeah, my 2018 advanced came with Samsung 981 oem

-

You'll get a Samsung PM981

-

I couldn't agree more and have never understood this either. In addition to using 4 core 45W chips, I'd be interested in at least understanding how gaming laptops with max-q would perform with 4 core 15W chips as well, from both a performance and battery life perspective.

As an example, the surface book 2 15" has a 4 core 15W chip with a regular 1060, and if I remember right its benchmarks were in line with 4 core 45W gaming laptops and it has unbelievable battery life. -

It's impossible for it to be on par with a 45 watt gaming laptop. Maybe with a 1050 Ti one, or the Dell XPS 15 with a 1050, like in this article. I believe Notebookcheck didn't even want to post a longer XTU screenshot because of the high temperature even at low CPU load.

-

Anyone have data on temps of 2070 max Q vs 2080 max Q? Nvidia slides seem to indicate they have the same TDP, so I'm wondering if the thermals are any better for the 2070 version than the 2080 version. Given that the GPU is already fast enough for 1080P on the 1070, not sure if I even really want a 2080 in this chassis.

-

Since no one who owns this is actually providing us with this kind of useful information I'll do a short write up on temps, clock speeds and firestrike scores tomorrow when I receive mine

-

As someone who wrote for notebookcheck, can you link me the article you are citing that graph from? Is it this one: https://www.notebookcheck.net/Micro...0-Laptop-Review.284622.0.html#toc-performance ?

-

Yes, same XTU screenshot. It's equipped with a 1060 but there's no GPU benchmark. I think I know why: in some titles performance would be the same as a 1050 Ti with a quad-core 45 watt CPU, at an almost 3 times higher price tag.

-

There are some benchmarks. The lower performance is brought up and blamed on power consumption and thermal limits.

"

The Witcher 3 Performance Analysis

We are obviously going to test the Surface Book 2 15 with various modern games but first let’s take a closer look at the effect the three performance profiles had on frame rates, temperatures, system noise, and power consumption when running The Witcher 3 (Ultra).

While performance differed measurably between the three, the effects were minimal. Comparing the results to the records in our benchmark database we were able to determine that even the Surface Book 2’s best results were at the lower end of the GTX 1060 spectrum. The reason for this is quite possibly the power consumption limitation imposed upon the device.

"

However, there are other benchmarks missing. This review was labeled as a "live" review, meaning it was updated as it went because it was a high-demand review. It was also translated from the original, so I suspect that the English article was simply not updated after the original version finished. (and I seem to be right: https://www.notebookcheck.com/Test-...-GTX-1060-Laptop.284558.0.html#toc-emissionen) -

Thanks for the link, it's now clear you shouldn't use it for gaming. It states they will test it further, especially with the different power settings, because the included power adapter could struggle under load:

"We will carry out the remaining tests and measurements over the next few days. Our focus will be mainly on the effects of the different power settings on performance and, of course, on power consumption, because the included power adapter could come under strain under load." -

Finishing up some stress testing now on my 2080 max q. Initial impressions: This thing stays cool cool cool under max load with fans ramped up to 100%, something my 1070 max q could not achieve

Game Mode set in Razer Synapse

Fan Speed set to Manual 100%

ThrottleStop -100mV

Last edited: Feb 6, 2019 -

Great score and temperatures, pretty promising. You can still go further by running OC Scanner in Afterburner under game mode conditions, saving the result to a profile, and running again, if you want a higher score.

The Notebookcheck reviewer should learn from you how to run proper gaming benchmarks on a Blade laptop. In his 2070 review, the CPU (physics) performance is too low for an 8750H, most probably because it was in balanced mode, which limits CPU TDP. -

I'm pretty happy with the score, I mainly run it just for a quick check on temps, power limiting, all those goodies.

Tonight I'll game for a few hours with the auto fan profile activated and share the results with you guys so you can get some real world temps.

On my razer 1070 max q I was hitting about 85c on the gpu and running as high as 90c on the processor after gaming for a few hours, this is with Throttlestop enabled and fans running at 100% plus a laptop cooling pad.

Razer Blade 15 RTX update

Discussion in 'Razer' started by Joikansai, Jan 7, 2019.