-

Did you disable AVX / FMA?

Exit prime95 and add these lines to the top of local.txt:

CpuSupportsAVX=0

CpuSupportsAVX2=0

CpuSupportsAVX512F=0

CpuSupportsFMA3=0

CpuSupportsFMA4=0

Then reset the readings in HWiNFO64, restart Prime95 Small FFTs, and see if the core temp differential improves or goes away. -
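For anyone who would rather script the edit described above, here is a minimal Python sketch. It assumes Prime95 is closed first (as the post says) and that you pass in the path to the local.txt in your own Prime95 folder. The function name and the duplicate-key cleanup are my own additions, not part of Prime95.

```python
from pathlib import Path

# The overrides from the post; 0 tells Prime95 not to use that instruction set.
DISABLE_LINES = [
    "CpuSupportsAVX=0",
    "CpuSupportsAVX2=0",
    "CpuSupportsAVX512F=0",
    "CpuSupportsFMA3=0",
    "CpuSupportsFMA4=0",
]


def prepend_disable_lines(local_txt: Path) -> None:
    """Write the overrides at the top of local.txt and keep the other settings."""
    existing = local_txt.read_text().splitlines() if local_txt.exists() else []
    # Keys we are about to write, compared case-insensitively.
    keys = {line.split("=")[0].strip().lower() for line in DISABLE_LINES}
    # Drop older copies of the same keys so each one appears only once.
    kept = [line for line in existing if line.split("=")[0].strip().lower() not in keys]
    local_txt.write_text("\n".join(DISABLE_LINES + kept) + "\n")
```

Run it from the Prime95 folder as `prepend_disable_lines(Path("local.txt"))`, then start Prime95 again.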

![[IMG]](images/storyImages/neyko0.png)

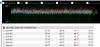

Added the lines to the .txt file and got these results. -

30 seconds is kinda short, let it run for 5 minutes or so...

It's not looking good though... too bad you didn't get a reading before re-pasting and grinding down the heatplate... sigh. -

This is what I had with stock values, before repasting.

-

That's why we use Prime95 instead of games: it gives a stable, repeatable test for sharing results. Look at the % load jumping around on all the cores. They aren't getting the same load at the same time, so you can't compare temps core to core; they are already varying, so the variance could be due to the game load or to cooling issues.

What did it look like after a run of 5 minutes or more? Or is that what you posted earlier, and it was only HWiNFO64 that ran for 30 seconds? You want HWiNFO64 to start and end with the Prime95 run so you see the results from the entire run.

Generally 2-3°C is a typical best-case core temperature differential; some are right at 0-1°C, but that's rare. Average is 5-6°C. Intel "allows" up to 10°C on desktop CPUs (LGA, with IHS fitment issues), so you can live with it, but your hottest core will trigger thermal throttling before the coolest core has a chance to ramp up.
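To judge the differential over the whole run rather than from one snapshot, you can log sensors to CSV (HWiNFO64 has a logging option) and take each core's maximum. A rough Python sketch, assuming a CSV in which every row holds a numeric temperature per core column; the column names below are hypothetical, so match them to whatever your log actually contains. The buckets only restate the rough ranges in the post above.

```python
import csv
import io


def per_core_max(log_csv: str, core_columns: list[str]) -> dict[str, float]:
    """Highest reading seen in each core column over the whole log."""
    maxima = {col: float("-inf") for col in core_columns}
    for row in csv.DictReader(io.StringIO(log_csv)):
        for col in core_columns:
            maxima[col] = max(maxima[col], float(row[col]))
    return maxima


def differential(maxima: dict[str, float]) -> float:
    """Hottest core max minus coolest core max, in degrees C."""
    return max(maxima.values()) - min(maxima.values())


def rate_differential(diff_c: float) -> str:
    """Bucket the spread using the rough ranges quoted in the thread."""
    if diff_c <= 3:
        return "best case (0-3 C)"
    if diff_c <= 6:
        return "typical (up to the 5-6 C average)"
    if diff_c <= 10:
        return "within Intel's desktop allowance (up to 10 C)"
    return "over 10 C"
```

Usage: `rate_differential(differential(per_core_max(text, ["Core0", "Core1", "Core2"])))`, where `text` is the log file's contents and the column names are placeholders.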

It's up to you to decide how to deal with it at this point, either live with it, keep re-pasting, or ask your vendor to fix it on RMA - or if you just got it you could try to swap for a new one.

Now that you've tried to fix it yourself you likely won't be allowed to return it to swap for another one, but they should fix it for you - they may charge since you went in there on your own and mucked around, maybe making it worse by sanding the heatplate...

Sorry you are dealing with this. It's a waste of your time, and you shouldn't have to fix a brand new laptop out of the box. Please let us know how it works out. -

Also, I applied the thermal paste with the dot method; I think that is the best method…

-

I will leave feedback when the Arctic and Gel Nano arrive.

-

Generally the fix for the core temperature differential is to get an even tightening of the screws around the attachment points so that the heatplate meets flat against the CPU die.

Angled fitment means the heatplate rocks one way or the other: one half fits better than the other, so 2 cores pair off cooler and 2 cores pair off hotter.

You can tilt the heatplate with thermal pads that are too thick or too thin, with paste built up too thick on one half or the other, or by screwing in the screws unevenly. Tighten 1/4 turn at a time, or the smallest bite increment, going across / around the heatplate.
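The "across / around" tightening above can be written out as a plan. A small Python sketch, assuming the screws are numbered 0 to n-1 in order around the heatplate; the screw count and number of quarter-turn passes are placeholders, not an MSI spec.

```python
def cross_order(n_screws: int) -> list[int]:
    """Visit screws numbered 0..n-1 around the plate, each followed by the one roughly opposite."""
    half = (n_screws + 1) // 2
    order = []
    for i in range(half):
        order.append(i)
        if i + half < n_screws:
            order.append(i + half)
    return order


def tightening_plan(n_screws: int, passes: int) -> list[tuple[int, int]]:
    """(screw, pass) steps: one quarter turn on every screw per pass, in cross order."""
    return [(screw, p) for p in range(1, passes + 1) for screw in cross_order(n_screws)]
```

For 4 screws this gives 0, 2, 1, 3 on every pass, so no corner gets more than a quarter turn ahead of the one opposite it.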

I try to level out the paste myself, using a card to drag across and pull up as much paste as I can while still getting a thin all over coating. The thinner the better to get an even coverage and fitment. I also cut out about 1/8" around all the external edges to keep air from getting in and drying out the paste.

Eyeballing it, feeling it, measuring it, all help, and it might take a few times to get the feel of fitting it well.

Good luck. -

I think I have the same problem, but I haven't repasted it... yet.

I hadn't played games for a while, then ran ACO and noticed high CPU temperatures. I'm not sure, but I don't think that was the case when I bought the laptop 2 months ago.

So I ran a stress test in AIDA and saw that two of my cores run hotter than the others. I came here for help and see exactly the same problem?

I ran a Prime95 stress test with AVX / FMA disabled and got the same results.

Core #0 (1st in AIDA) runs 4-6°C hotter.

Core #2 (3rd in AIDA) runs 8-10°C hotter.

Core #4 (5th in AIDA) has the same temps as the others (in this particular AIDA stress test it jumped to 94°C a couple of times for some reason, while yesterday it was exactly the same as the others).

AIDA stress (7 mins):

Prime95 stress (7 mins):

Any ideas what to do? I haven't opened the laptop, and it still has that stupid "factory seal" sticker. -

How do I undervolt my CPU? For some reason I've never done it before.

Is there a way to undervolt only one core?

I would repaste first and ask later, but the "factory seal" sticker worries me... well, it pisses me off more, but anyway...

EDIT: OK, I found it in ThrottleStop. What voltage should I test? -

I offset the voltage by -99.6 mV in ThrottleStop and temperatures are much better (at max fan), but it didn't cool off my hot core...

Interestingly, the average core VID didn't change and stayed at 1.03 V, while the maximum dropped from 1.3 V to 1.2 V.
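Why 99.6 mV rather than a round 100 mV: as far as I know, the voltage offset ThrottleStop applies is stored in steps of 1/1024 V (about 0.977 mV), so whatever you request gets rounded to the nearest step. A Python sketch of that rounding, assuming that encoding:

```python
STEP_MV = 1000 / 1024  # assumed offset step: 1/1024 V, about 0.977 mV


def to_units(offset_mv: float) -> int:
    """Nearest whole number of 1/1024 V steps for a millivolt offset."""
    return round(offset_mv / STEP_MV)


def to_mv(units: int) -> float:
    """Millivolts actually applied for a given number of steps."""
    return units * STEP_MV
```

Under that assumption, -99.6 mV is exactly 102 steps, -125 mV is a clean 128 steps, and a requested -160 mV lands on 164 steps, about -160.2 mV.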

-

I have set my 8750H to a -160 mV undervolt without any BSoD.

-

Do you have the AC/DC loadline set to 1 in the BIOS? You should do that if you don't. Falkentyne has posted the instructions like 10 times already across several threads... I do not undervolt at all beyond that, and even running Prime95 I do not get over 86°C on the hottest core with a 35°C room temperature (and that is not even with full fans; with Cooler Boost it's more like 82°C max).

-

@prodj Though I see there is a whopping 13°C difference in max temp between the coolest and hottest core on the first test... not sure if that is due to the AIDA stress test loading it unevenly or if you have some serious heatsink issues. It seems more like the latter.

-

@prodj What is the temperature in your house? I went ahead and downloaded AIDA64 to get a feel for what it does to my lappy... It took almost a whole minute to make it hot enough to even get the fan spinning: 73°C max on my hottest core (core 2), with the coolest core at 71°C max (core 5, the last one). And that was before the fan started to spin, haha. Now they are all at a steady 69-71°C with a mere 58 W power draw and 2800 rpm on the fan. I mean, it's like 35°C inside here; AIDA64 is not a stress test at all, lol. I only run the CPU test, with the other options unchecked.

You need to start by setting the appropriate settings in the BIOS and see what happens, but it seems you might really have some big trouble with the heatsink and/or the paste.

PS: The screenshot only shows 4 cores for some reason, but I confirmed in HWiNFO that all are loaded at 100%.

-

I don't know the temperature. I run the A/C at full power 24/7, so it should be around 25°C I guess, and when I boost the fans without load, the CPU can go as low as 30°C after 5 mins.

Also, I stressed almost everything in AIDA (CPU, FPU, cache and memory); try that =) -

What does setting the AC/DC loadline to 1 do? I'll try to find his posts, but if you have a link, I'd appreciate it.

-

With these settings it got my CPU to 87°C max on core 2 briefly (less than a second); the coolest cores are at 83°C max. But my fan never went faster than 3400 rpm, and the load seemed super uneven, as I saw it jump from 70 to over 80 and back a lot... I don't think it's a good stress test tool if you want to know the worst-case scenario; Prime95 still makes the thing work harder. I will shoot you a private msg about the AC/DC loadline stuff, but I don't fully understand it myself. I just know setting it to 1 works like magic.

-

So, he said:

But I didn't want to disable any shenanigans and I didn't want static voltage.

Why do we need that? Is it better somehow? -

Read my private message ^^ You do not need to set a static voltage or anything at all. Just setting the AC/DC stuff to 1 is plenty amazing. No need to poke around with the rest.

-

Yeah, it looks very jumpy when you stress everything, but the average temperatures in HWiNFO are consistent with other tests like Prime95, so it's still OK for seeing what's up when I don't want to run Prime95 + HWiNFO and just want to see temp graphs.

-

I have a few questions about my frame rates using an Acer Predator X34P monitor. It's G-Sync capable, and I have it set to a 120Hz refresh rate using a DisplayPort 1.2 cable.

1) I noticed my FPS going beyond 120 in menus and such. I thought G-sync capped it at the monitor refresh rate. How can I verify G-sync is working?

2) I'd like to OC my GPU by 5-10% for more consistent frames. Pushing 3440x1440 on the 1080 works, but I'd like to squeeze out as much as I can. What program can I use to reliably OC the GPU? -

As far as I know, G-Sync might need V-Sync activated to work at high FPS. I play without G-Sync and honestly it's fine for me too.

Anyway, you can just use Dragon Center's turbo mode if you have that installed and don't want to undervolt, OR MSI Afterburner (which I use, since I undervolt at the same time). Both will be fine. Also, Dragon Center doesn't mess up Afterburner's settings as long as you don't touch Dragon Center's OC settings (I just keep it on Sport mode all the time and have Afterburner apply my custom frequency x voltage curve at startup, which is how I achieve the undervolt). -

So, I tried "AC/DC loadline = 1" and got exactly the same temperatures as with default settings and a -125 mV undervolt, even though it shows different VIDs. Temps and CPU usage are also more stable.

AC/DC LoadLine = 1:

Undervolt by -125mV: (you can see spikes in temps and drops in CPU usage for some reason)

-

It really just depends on your room temp. I don't think the max temps are bothersome at all, especially for the cooler cores. 71°C max on a stress test is not bad at all on factory paste, I think... What bothers me is the 9°C difference, which suggests that the heatsink doesn't sit right. MSI won't help with this; they won't bother unless it catches fire or shuts down from overheating. Your best bet would be to return it if you can and hope the next one has a better-fitting heatsink, OR attempt to repaste and maybe sand the heatsink. I can't help you with that though.

-

You could run the AIDA64 System Stability Test as in the guide in the link. I'm sure it will be a bit hotter that way.

With shared heatpipes as in some models it will be a lot hotter.

-


-

Overheating.

It will run even hotter if you uncheck the "Stress CPU" box. And use max fans when you run stress tests.

-

Lol, no, it turned out the power cable was unplugged. I hate this cable, why is it sooo loose???... =(

-

Still 99°C

-

Falkentyne Notebook Prophet

Hot cores:

1) The CPU surface is slightly convex.

2) The heatsink is convex.

3) The VRMs are above cores 0, 2 and 4, and are raised and cooled by VRM pads on the heatsink.

Put 1, 2 and 3 together, you get hotter cores on the vrm side.

There are only 3 fixes for this.

1) Remove the 1mm thermal pads on the VRMs and replace them with 0.5mm thermal pads (Arctic 0.5mm).

2) Combined with 1: replace the paste with Cooler Master MasterGel Maker Nano (or MasterGel Nano, whatever it's called).

3) Sand the heatsink very lightly to help make it flat rather than convex (sand off as little as possible; you don't want to reduce heatsink pressure), and then do 1 and 2. -

Falkentyne Notebook Prophet

MSI uses an internal "Loadline Calibration" setting which removes voltage "Vdroop" at full load, keeping the voltage at load and at idle very close to the same.

IA AC loadline (NOT THE SAME SETTING AS THE ABOVE!!) = Auto (or 179 = 1.79 mOhms) causes the voltage to RISE at full load, depending on current (more current = more Vrise).

IA DC loadline causes the VID reported to the system to *DROP* by 1.79 mOhms of resistance based on current (more current = more VID drop); the IA DC setting does NOT affect the actual voltage going into the CPU (not fully true, but it barely affects it).

The problem is, IA AC loadline boosts the voltage, which is then reported as VID, and then IA DC loadline DROPS the VID that is reported (but NOT THE VOLTAGE). So at full load, if IA AC and DC are both set to Auto (179 in the BIOS, or 1.79 mOhms), you wind up seeing a MUCH lower VID than what the processor is actually getting!

The high VIDs (very high spikes) you see are at idle and very, very light load, because the IA AC setting boosts the VID when load changes from idle to light load, but the IA DC setting does not 'drop' the VID because the current is too low. So you can see a VID boost of about 125 mV (close to your undervolt setting).

Setting AC loadline to "1" prevents the actual CPU voltage from rising at full load, and this gets reported as the "baseline" VID (before the DC setting is applied); setting DC loadline to "1" prevents the VID (not the voltage, just the reported VID) from dropping below the idle setting at full load.

Why does the "DC" setting even exist? Because Intel's design documents, for boards with "loadline calibration" disabled, require the voltage to "drop" at load by a certain amount of mOhms of resistance, to counter voltage spikes on idle-to-load changes. But MSI uses a hidden, unchangeable loadline calibration setting that already stops "Vdroop" from happening. So you wind up getting "Vrise", with the VID being reported completely incorrectly because of it... -
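To put numbers on the full-load case described above, here is a simplified linear model in Python. Assumptions: the BIOS value is in hundredths of a mOhm (179 = 1.79 mOhms, 1 = 0.01 mOhms), MSI's hidden LLC cancels Vdroop completely, the DC loadline changes only the reported VID, and the 1.0 V / 70 A operating point is made up for illustration. It does not try to model the light-load spikes.

```python
def bios_to_ohms(setting: int) -> float:
    """Assumed BIOS encoding: 179 -> 1.79 mOhm, 1 -> 0.01 mOhm."""
    return setting / 100 / 1000


def loadline_model(base_vid_v: float, current_a: float,
                   ac_setting: int, dc_setting: int) -> tuple[float, float]:
    """Return (actual CPU voltage, reported VID) at full load.

    Simplifications: LLC cancels Vdroop entirely, the AC loadline raises the
    real voltage by I * R_ac, and the DC loadline only lowers the reported VID
    by I * R_dc.
    """
    actual_v = base_vid_v + current_a * bios_to_ohms(ac_setting)
    reported_vid_v = actual_v - current_a * bios_to_ohms(dc_setting)
    return actual_v, reported_vid_v
```

With both settings at 179 and 70 A, the model gives about 1.125 V actually going into the CPU while the reported VID reads 1.0 V, a gap of about 125 mV; with both at 1, the actual voltage is about 1.0007 V, so the gap shrinks to under 1 mV.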

@Phoenix Does the latest i9 version of the GT75 (the one you have) still have the MUX switch to switch to iGPU-only mode?

-

Spartan@HIDevolution Company Representative

Negative. The button which used to switch GPUs now is a customizable button to launch any app you want, Default app is Dragon Center.

The Intel GPU is still there but disabled in the BIOS, and you cannot enable it. -

No MUX switch but you can enable iGPU in the unlocked bios and run with dGPU disabled? @Falkentyne

-

Spartan@HIDevolution Company Representative

You can't enable the iGPU on the new GT75. -

Falkentyne Notebook Prophet

The iGPU can be enabled.

Only question is will it output to the LCD or will it black screen? And someone else is going to have to test that. Note there are *TWO* fields which allow GPU selection.

I know the iGPU can be enabled because on the GT73VR and GT75VR, in *LINUX*, you can have both the dGPU and iGPU enabled and able to be *RENDERED TO* at the exact same time. In Windows, however, the iGPU will be enabled but the drivers won't load (it will appear in Device Manager, but the Nvidia GPU will be the one active). -

Falkentyne Notebook Prophet

BTW "SG" stands for "Switchable Graphics". So it is possible to enable the iGPU. But if you black screen you're going to have to do the 45 second power button press to force a CMOS clear, until someone finds the exact combination of options to set the iGPU as the display output device to the LCD.

-

Without a MUX switch to connect the output of the iGPU to the display the iGPU is never going to be usable as a display device.

Without the ability to switch between the iGPU and dGPU, the dGPU is hardwired to the display.

If the iGPU were hardwired to the display, and the dGPU was hardwired to display through the iGPU, then that would be a form of Optimus, or whatever Windows 10 is calling it these days.

That last scenario is that horrible situation where the iGPU sets the output display parameters, and the dGPU is slaved to run through it even when the dGPU is being used for rendering.

With Optimus the iGPU is powered up and stealing power and thermal headroom from the CPU sitting next to it on the carrier and ruining the chances for great performance from the CPU.

The active iGPU is blocking the abilities of the dGPU - no G-sync, no color or resolution control from the dGPU, it just keeps getting worse and worse from there.

But, with Optimus you might get an extra 30-60 minutes of battery life. -

Falkentyne Notebook Prophet

There is no MUX switch in the GT73VR or GT75VR either. It's "Muxless". It even says so in the unlocked BIOS: "Switchable Graphics Mode Select: Muxless".

It's all controlled by the EC (Embedded Controller). The BIOS simply signals the EC which device to render to.

You can even use the EC to switch to the iGPU directly through Windows on the GT73VR by hacking the EC RAM!! But I forgot the value.

I think a value of "01" in EC RAM register F1 switches to the iGPU and a value of "04" switches to the dGPU, or maybe it's "05". But it causes a boot cycle loop once.

So someone needs to try it. I bet $50 it will work. And I'll eat a frog if it doesn't.

Remember, MSI simply recycles old code. I am 99.9% sure you CAN switch to the iGPU, either by messing with the unlocked BIOS or by messing with Embedded Controller RAM register "F1". -

Ah, here it is: you are looking at the BIOS options for an Optimus-like mode, where the dGPU is connected through the iGPU, which the GT73's switchable iGPU / dGPU setup doesn't use:

Hybrid Graphics

- MUX-ed: Displays are only connected to the iGPU or dGPU.

- MUX-less: Displays are always connected to iGPU; the iGPU uses the display engine of the dGPU solely for rendering, not displaying. In theory, the two GPUs act as one.

There's probably a better link, but that will do for now.

Muxless Graphics (Optimus/CrossDisplay) Must Die!

https://www.reddit.com/r/linux/comments/vosq5/muxless_graphics_optimuscrossdisplay_must_die/

"I've got a $2500 laptop order on hold right now thanks to Linus Torvalds. His "F Nvidia" clip got me to look into Optimus and realize that it really, really sucks for anyone who uses their computer for actual work (scientific visualization in my case).

Muxless supposedly saves power when you're not using the discrete GPU, but something that the marketing doesn't talk about is that it's less efficient when you are using the dGPU. If I'm trying to drive a 2560x1600 display at 60Hz I'm burning ~1GB/s of PCIe bandwidth copying the framebuffer to the iGPU, another ~1GB/s of main memory bandwidth updating the display, and I've incurred an extra frame of latency.

This isn't about open vs. closed software, it's about a horrible design foisted on the consumer by Intel (probably through abuse of its monopoly power). There is no way Nvidia/AMD willingly chose this design; it marginalizes their bread and butter product and imposes a significant performance penalty, making Intel's integrated GPU look that much better. Linus flipped off the wrong company IMO.

The right way to do hybrid graphics is to have a real video mux incorporated into the dGPU, with genlock (or possibly only framelock) between the dGPU and the iGPU so that video can be cleanly switched on a frame boundary. Everybody wins, the motherboard manufacturers have a clean design, low-end systems aren't any more complicated, Intel doesn't have to do anything and Nvidia/AMD don't give up performance on their flagship products.

In the mean time, I sincerely hope the Linux community votes with their dollars and says "no way in hell" to muxless graphics."
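The ~1GB/s figure in the quote is easy to check. A small Python calculation, assuming an uncompressed 32-bit frame (4 bytes per pixel) copied once per refresh:

```python
def framebuffer_bytes_per_second(width: int, height: int, refresh_hz: int,
                                 bytes_per_pixel: int = 4) -> int:
    """Bandwidth to copy one full uncompressed frame per refresh."""
    return width * height * bytes_per_pixel * refresh_hz


def frame_time_ms(refresh_hz: float) -> float:
    """Length of one frame, i.e. the extra latency of one buffered frame."""
    return 1000 / refresh_hz


rate = framebuffer_bytes_per_second(2560, 1600, 60)
print(f"{rate:,} bytes/s = {rate / 1e9:.2f} GB/s")
print(f"one extra frame at 60 Hz = {frame_time_ms(60):.1f} ms")
```

2560x1600 at 60Hz works out to 983,040,000 bytes/s, about 0.98 GB/s, and one extra frame of latency at 60Hz is about 16.7 ms.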

-

Falkentyne Notebook Prophet

Well we still need someone to actually test the System Agent options to see if the iGPU can be set as the primary display device.

I'm still not sure why no GT75 or GT63 Titan owners have tried this yet.

The worst that will happen is a black screen; then hold the power button down for 45 seconds, and you're back to booting after 2 minutes of black screen + MSI boot loops.

The big question is if the "MSI GPU mode" function is still present in the EC even though it's not actually shown in the Bios.

The GT73VR and GT75VR did not have any of those options.

I'll post the screenshot again here, because the help text wasn't showing when the dropdown menus were selected:

You can see what the text says.

So,

The question is:

1) The GT73VR and GT75VR did not have any of the 0x5E and 0x5F register offset fields options that are shown here. So these options clearly do something.

2) Does using RW Everything, clicking on the EC tab and changing the value of EC RAM register F1 from 00 to a higher value (01, 04 or 05) cause the system to boot loop and switch to the iGPU?

The "GPU" button in the GT73VR and GT75VR simply wrote to EC RAM register F1 and rebooted the computer. That's all it did.

3) Is the "MSI GPU Mode" setting simply hidden but still accessible in a different way by changing the options shown?

There are THREE "primary display" options, which show as iGFX, PEG, PCI or SG, all with the same four options.

To switch to the iGPU, you first have to enable it:

Change "Internal Graphics" from Auto to Enabled (this also forces the iGPU to activate on the GT73VR, but Windows will not load the drivers if the dGPU is active; Linux will, however).

Then try switching 1, 2 or all three of the "Primary display" settings to iGFX, save, power off, and hope you get the iGPU.

Anyone want to try? -

That's the problem with "unlocking" the BIOS, you see all sorts of stuff that doesn't necessarily apply to your build or laptop...

If MSI wired up the GT75 iGPU to be able to use the display output, they would have followed through with giving it to the owners to use. There's no reason for them not to.

I guess you could install MSI SCM on the GT75 and see if it works too... you can switch from within the app, you don't need a physical switch wired up.

-

Falkentyne Notebook Prophet

SCM doesn't allow you to switch the graphics renderer from the program. The switcher is mapped to case button #2.

It just sends a popup confirmation (Press OK to reboot to switch to integrated graphics), then it programs the EC (EC RAM register F1) and reboots. The popup code was wired to the button above the cooler boost button. But now that button opens Dragon Center instead.

The easiest way to see if the "MSI GPU Mode" feature is still present is to just download RW Everything and program values into EC RAM register F1. Value "00" seems to be "current" or "default", but 01 switches to the dGPU (I THINK) and either 04 or 05 switches to the iGPU. It may have been the other way around, however; I found out when I was bored, messing around changing random EC RAM registers, and noticed the Nvidia card was disabled after rebooting.

You then have to reboot manually and see what happens.
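The posts above use RW Everything on Windows. For anyone poking at this from Linux, here is a read-only Python sketch for checking what register F1 currently holds before and after a switch. It assumes the kernel's `ec_sys` module is loaded (`modprobe ec_sys`, as root), which exposes EC RAM as the debugfs file `/sys/kernel/debug/ec/ec0/io`. It deliberately does not write anything, since the thread hasn't settled which value selects which GPU.

```python
from pathlib import Path

# Linux ec_sys debugfs file; needs root and `modprobe ec_sys` (assumption: one EC, ec0).
EC_IO = Path("/sys/kernel/debug/ec/ec0/io")


def read_ec_register(offset: int, path: Path = EC_IO) -> int:
    """Return the byte at `offset` (e.g. 0xF1) from an EC RAM image."""
    data = path.read_bytes()
    if not 0 <= offset < len(data):
        raise ValueError(f"offset 0x{offset:02X} outside {len(data)}-byte EC image")
    return data[offset]
```

Usage: `print(f"F1 = {read_ec_register(0xF1):02X}")` prints the value in the same two-digit hex form RW Everything shows.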

If that doesn't work, then you have to mess with the System Agent options.

I mean, MSI has the iGPU drivers available for the GT75 but no "noob friendly" way to switch to the iGPU. But I'm VERY sure you can switch to it. MSI recycles code. I know because even on the old GT70, physically disconnecting the battery disables Battery Boost ("NOS"), which reduces the power allowance, and changing two EC RAM registers (31 = "09" and 42 = "64") re-enables NOS despite the battery being removed.

There's no risk in messing with those options anyway. If you get a black screen, you do the 45-second power button salute to Putin & Trump.

-

Hey guys, I can get this PC for €3000. Is it a good price?

https://m.ruten.com.tw/goods/show.php?g=21825244643360 -

Spartan@HIDevolution Company Representative

They don't have the Intel Graphics Driver listed for the new GT75 Titan, they did though for the GT75VR Titan Pro which is a totally different laptop.

MSI GT75 Titan 8RG Drivers

![[IMG]](images/storyImages/2018-07-28_001350.jpg)

![[IMG]](images/storyImages/fedwkc5.jpg)

-

The SCM app used to be able to switch GPUs from within the app, without touching the switch, under Windows 8.1. It looks like that feature is missing from SCM under Windows 10, and I couldn't find any mention of how to do it from the SCM app in the current manual.

The GT75 doesn't have an Intel GPU download.

The GT75 only has VGA Nvidia Drivers on the support page:

https://www.msi.com/Laptop/support/GT75-Titan-8RF#down-driver&Win10 64

The GT73 has both Nvidia and Intel Drivers for VGA on the support page, and the SCM Utility:

https://www.msi.com/Laptop/support/GT73VR-7RF-Titan-Pro.html#down-driver&Win10 64

Again, why would MSI wire up the iGPU to the display path and not provide a way to use it?

I think it's another waste of time. I'm out of this one.

Yup. The Intel 7th gen CPU GT75VR 7RF Titan Pro has SCM and Intel drivers:

https://www.msi.com/Laptop/support/GT75VR-7RF-Titan-Pro#down-driver&Win10 64 -

That looks like a Taiwan / Asian market only configuration, no listings for pricing here in the US for the 084 model.

There are a bunch of other for sale hits on google, so shop around.

*** The Official MSI GT75 Owners and Discussions Lounge ***

Discussion in 'MSI Reviews & Owners' Lounges' started by Spartan@HIDevolution, Jun 23, 2017.

![[IMG]](images/storyImages/vh40ph.png)